Researchers from Peking University, Southern University of Science and Technology, and Huawei Noah’s Ark Lab have unveiled MetaMath, a project that improves the mathematical problem-solving ability of Large Language Models (LLMs). Despite considerable advances, LLMs still struggle with intricate mathematical reasoning, and models like LLaMA-2 often falter on such problems. MetaMath aims to close this gap by fine-tuning on a specialized dataset called MetaMathQA, designed explicitly for mathematical reasoning.

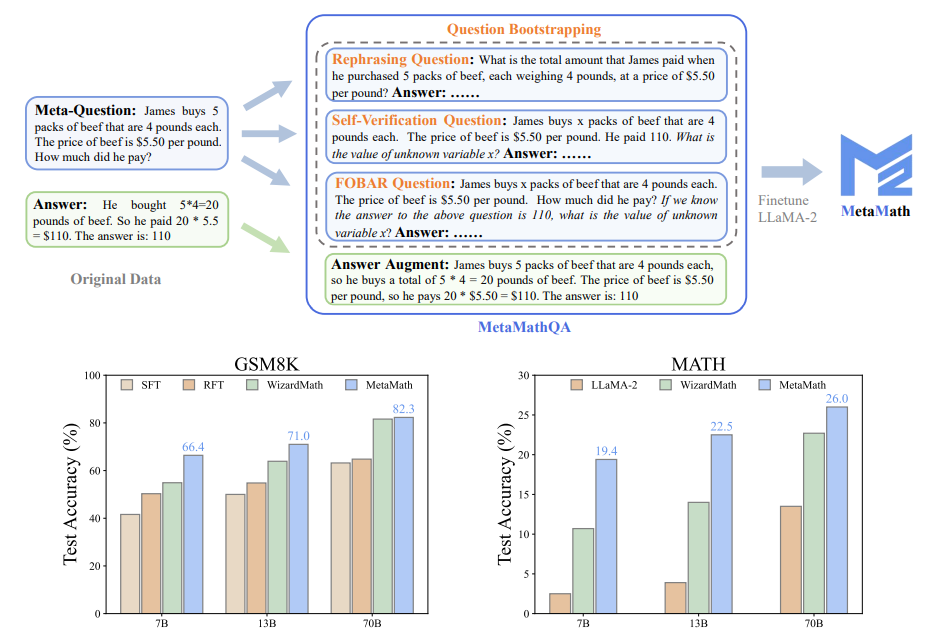

By bootstrapping mathematical questions, that is, rewriting each question in several different forms, the researchers were able to offer multiple perspectives on a single mathematical problem and thus diversify the training data. This strategy has paid off, with the MetaMath models showing strong results on recognized benchmarks.
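To make the idea of bootstrapping a question concrete, here is a minimal sketch of one possible rewriting: masking a number in the question and asking for it given the known answer, in the spirit of the backward-reasoning questions described in the paper. The function name `backward_variants` and the sample question are hypothetical, chosen only for illustration. The actual MetaMathQA pipeline relies on an LLM to rewrite questions and generate answers, which this sketch does not attempt.

```python
import re


def backward_variants(question: str, answer: str) -> list[str]:
    """Build one 'backward' variant per number found in the question.

    Each variant replaces a single number with the unknown x and asks
    the reader to recover it from the known final answer.
    (Illustrative helper only; not the authors' implementation.)
    """
    variants = []
    for match in re.finditer(r"\d+(?:\.\d+)?", question):
        # Replace only this occurrence of the number with x.
        masked = question[:match.start()] + "x" + question[match.end():]
        variants.append(
            f"{masked} If we know the answer to the above question is {answer}, "
            "what is the value of the unknown variable x?"
        )
    return variants


if __name__ == "__main__":
    # Hypothetical sample question, not taken from the dataset.
    sample = "Tom has 3 apples and buys 4 more. How many apples does he have?"
    for variant in backward_variants(sample, "7"):
        print(variant)
```

Each original question yields several new training questions that share the same underlying problem but require a different line of reasoning, which is the kind of diversity the article refers to.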

Remarkably, the MetaMath-7B model surpassed several of its open-source LLM counterparts by achieving a 66.4% accuracy on the GSM8K benchmark.

Another key finding of the research was the pivotal role of question diversity in the training datasets. Through their experiments, the team identified a positive correlation between the diversity introduced by bootstrapping methods and the model’s accuracy. However, it’s not just about quantity but quality. When they tried integrating external augmented datasets with MetaMathQA, the performance sometimes declined, suggesting that not all augmented data additions are beneficial.

An error analysis revealed a challenge for LLMs, including MetaMath: longer mathematical questions. While these extended questions proved more challenging, MetaMath consistently outperformed its peers, highlighting its superior capabilities.

In conclusion, the MetaMath project equips open-source LLMs with stronger mathematical problem-solving skills. The findings could meaningfully advance mathematical reasoning in AI models. However, as promising as the current results are, there is still much to explore and improve upon in future research.

Check full paper: https://arxiv.org/abs/2309.12284
