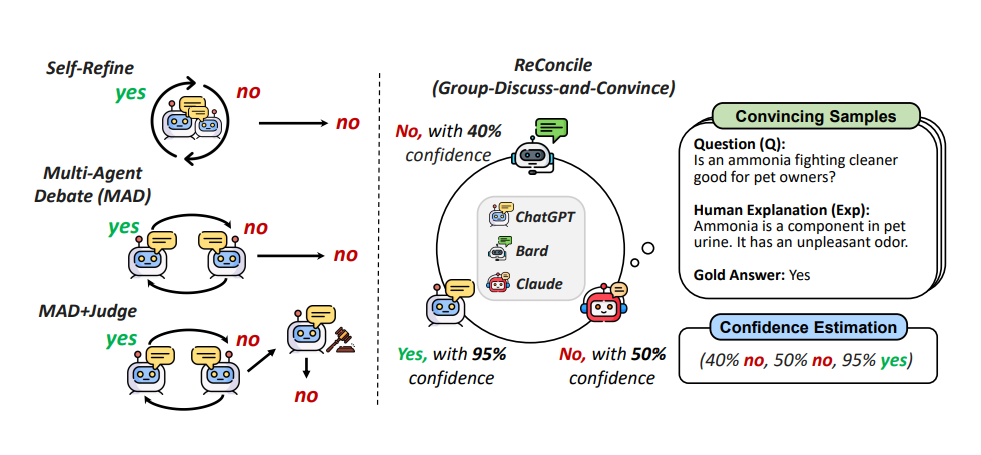

RECONCILE is a structured, multi-agent framework designed to enhance the reasoning capabilities of Large Language Models (LLMs). It was developed in response to the limitations of individual LLMs on complex reasoning tasks, and it provides a setting in which diverse LLM agents collaborate on a problem and reach a better consensus through structured discussion.

RECONCILE works by starting a discussion among multiple agents, each contributing its own insights and perspective. At the outset, each agent generates an individual response to the given problem. A series of structured discussion rounds then follows, in which the agents refine their responses based on the insights their peers have shared, aiming to reach consensus. This process continues until consensus is achieved, and the final answer is determined by a confidence-weighted vote among the agents.
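The round structure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Response` and `Agent` types, the `reconcile`, `weighted_vote`, and `has_consensus` helpers, and the stub agents are all hypothetical. Agents are modeled as plain functions instead of real LLM calls, a `max_rounds` cap (also an assumption) guarantees the loop terminates, and the vote simply sums each agent's self-reported confidence per answer (the paper may rescale confidences before voting).

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Response:
    answer: str
    explanation: str
    confidence: float  # self-reported confidence in [0, 1]


# Hypothetical agent interface: given the question and all agents' responses
# from the previous round (empty in the first round), return a new Response.
Agent = Callable[[str, List[Response]], Response]


def weighted_vote(responses: List[Response]) -> str:
    """Sum confidences per answer and return the answer with the highest total."""
    scores: Dict[str, float] = defaultdict(float)
    for r in responses:
        scores[r.answer] += r.confidence
    return max(scores, key=scores.get)


def has_consensus(responses: List[Response]) -> bool:
    """True when every agent gives the same answer."""
    return len({r.answer for r in responses}) == 1


def reconcile(question: str, agents: List[Agent],
              max_rounds: int = 3) -> Tuple[str, List[Response]]:
    # Initial round: each agent answers independently.
    responses = [agent(question, []) for agent in agents]
    # Discussion rounds: each agent revises after seeing everyone's previous response.
    for _ in range(max_rounds):
        if has_consensus(responses):
            break
        previous = responses
        responses = [agent(question, previous) for agent in agents]
    # Final answer: confidence-weighted vote over the last round's responses.
    return weighted_vote(responses), responses


# Stub agents standing in for real LLMs (hypothetical, for illustration only).
def fixed(answer: str, conf: float) -> Agent:
    return lambda question, previous: Response(answer, "unchanged", conf)


def persuadable(question: str, previous: List[Response]) -> Response:
    if not previous:
        return Response("B", "initial guess", 0.4)
    # Adopt the peers' confidence-weighted answer after seeing the discussion.
    return Response(weighted_vote(previous), "revised after discussion", 0.7)


answer, final = reconcile("toy question", [fixed("A", 0.9), fixed("A", 0.8), persuadable])
print(answer)  # A
```

Here the third agent starts with a different answer, adopts the group's answer after one discussion round, and the loop stops once all three agree. With real models, each `Agent` would format the previous round's answers, explanations, and confidences into its prompt.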

The framework is designed to foster diverse thoughts and discussion: each agent can revise its response in light of other agents' insights and can try to convince its peers to improve their answers. It has been implemented with state-of-the-art LLMs, including ChatGPT, Bard, and Claude2, and it significantly improves the reasoning performance of these agents on several benchmarks, surpassing prior single-agent and multi-agent baselines.

Notably, RECONCILE also proves effective when GPT-4, a more advanced model, is one of the agents. In this configuration, RECONCILE not only improves the overall performance of the team but also raises GPT-4's initial accuracy by an absolute 10.0%. This suggests that the framework can improve even the most advanced models through discussion and mutual feedback from diverse agents.

Experiments on multiple reasoning datasets, covering both commonsense and mathematical reasoning, show that RECONCILE improves upon prior methods and outperforms GPT-4 on some benchmarks. RECONCILE also reaches better and faster consensus between agents than a multi-agent debate baseline, making it a more efficient framework for enhancing the reasoning capabilities of LLMs.
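One way to make "better and faster consensus" concrete is to track, for each discussion round, the fraction of agents that agree with the most common answer, and the first round at which all agents agree. The sketch below is a hypothetical illustration of such a measurement: the `agreement` and `rounds_to_consensus` helpers and the toy `history` are invented for this example and are not taken from the paper's evaluation.

```python
from collections import Counter
from typing import List, Optional


def agreement(answers: List[str]) -> float:
    """Fraction of agents whose answer matches the most common answer."""
    if not answers:
        return 0.0
    _, top_count = Counter(answers).most_common(1)[0]
    return top_count / len(answers)


def rounds_to_consensus(history: List[List[str]]) -> Optional[int]:
    """Index of the first round in which all agents agree, or None if they never do."""
    for i, answers in enumerate(history):
        if answers and len(set(answers)) == 1:
            return i
    return None


# Toy history: the answers of three agents in rounds 0, 1, and 2.
history = [["A", "B", "C"], ["A", "A", "C"], ["A", "A", "A"]]
print([round(agreement(r), 2) for r in history])  # [0.33, 0.67, 1.0]
print(rounds_to_consensus(history))  # 2
```

Averaging these values over many questions gives a per-round consensus curve that can be compared across methods; a method that reaches higher agreement in fewer rounds converges faster.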

RECONCILE is a structured approach to complex reasoning problems that draws on diverse insights and external feedback from different model families. It offers an efficient way to combine the strengths of diverse Large Language Models and arrive at refined solutions to complex problems.

Read the paper: https://arxiv.org/abs/2309.13007