In a rapidly evolving technological landscape, Large Language Models (LLMs) such as OpenAI Codex are reshaping how people learn to program. A recent study examines how novice programmers interact with LLMs. It offers insights into their usage patterns, the challenges they face, and what these interactions mean for learning to program.

The study followed 33 learners, aged 10-17, as they worked through Python coding tasks using OpenAI Codex as an AI code generator. Its findings show how learners incorporate LLMs into their coding and how this affects their coding proficiency.

The learners exhibited four distinct approaches to integrating LLMs into their coding: AI Single Prompt, AI Step-by-Step, Hybrid, and Manual. The most common was AI Single Prompt, in which learners used Codex to generate an entire solution from a single prompt. This approach was consistently negatively correlated with post-test scores, suggesting that it may hinder learning.

Conversely, the Hybrid approach, which combines manual coding with AI-generated code, showed positive correlations with post-test scores. This suggests that a balanced use of LLMs may lead to a deeper understanding of coding concepts and principles.

The study also highlighted the drawbacks of relying heavily on LLMs. Some learners over-relied on the AI code generator, which may undermine their ability to write code independently. Learners also struggled with vague or underspecified prompts, which produced incorrect or incomplete code. This underscores the importance of communicating coding intent clearly and precisely.

From an educational standpoint, incorporating LLMs raises concerns about academic integrity and plagiarism that need to be addressed proactively. The study also reported cases where Codex produced solutions using concepts outside the current curriculum. This raises questions about whether, and when, such advanced tools should be introduced to novice learners.

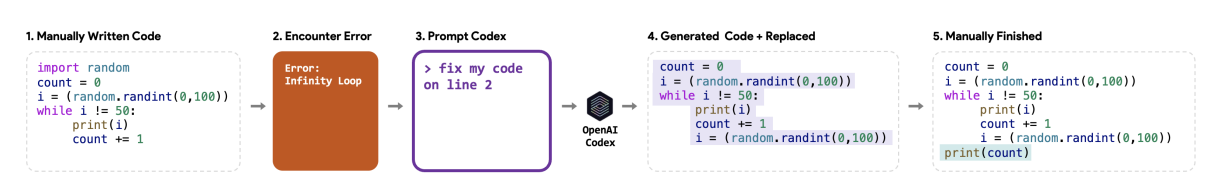

Crafting prompts and verifying AI-generated code emerged as crucial parts of the learning process. Learners approached prompt crafting in different ways, from copying text directly from the task description to writing prompts in their own words. The clarity and specificity of a prompt directly affected the accuracy and relevance of the generated code.

Some learners showed commendable self-regulation, manually verifying and modifying the AI-generated code to ensure it was correct and to understand the solution more deeply. These findings emphasize the importance of informed, balanced use of such tools to improve learning outcomes while mitigating the risks of over-reliance and academic-integrity violations.

In conclusion, AI code generators like OpenAI Codex are changing programming education, which calls for adaptations in both curricula and tool design. The study offers initial insights and lays the groundwork for further research into effective ways to learn with AI code generators. Balancing the use of these tools with a solid grasp of fundamental coding principles will be essential to harnessing advances in AI and enriching the learning experience.

Read paper: https://arxiv.org/abs/2309.14049